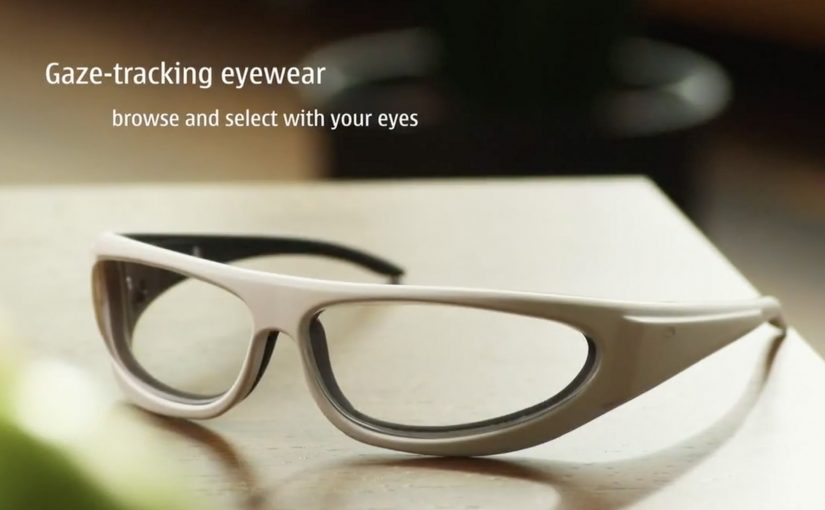

A glimpse into Nokia’s crystal ball comes in the form of its “Mixed Reality” concept video. It strings together a set of interaction ideas: near-to-eye displays, gaze direction tracking, 3D audio, 3D video, gesture, and touch.

The film plays like a day-in-the-life demo. Interfaces float in view. Sound behaves spatially. Attention (where you look) becomes an input. Hands and touch add another control layer, shifting “navigation” from menus to movement.

In consumer technology and UX research, future-vision films are often used to bundle emerging interaction modalities into a single, easy-to-grasp story.

What this video is really doing

It is less a product announcement and more a “stack sketch.” You can read it as a prototype of how computing might feel when screens move closer to the eye, audio becomes directional, and interface elements follow attention rather than clicks.

Standalone takeaway: The fastest way to communicate a complex interaction future is to show one human routine and let multiple inputs (gaze, gesture, touch, audio) layer into it naturally, without explanation.

The mechanism: attention as input, environment as output

The core mechanic is gaze-led discovery. If your eyes are already pointing at something, the system treats that as intent. Gesture and touch then refine or confirm. 3D audio becomes a navigation cue, guiding you to people, objects, or information without forcing you to stare at a map-like UI.
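To make the gaze-then-confirm idea concrete, here is a minimal Python sketch. It is not Nokia's implementation. The class name `GazeSelector`, the method names, and the 0.4-second dwell threshold are all assumptions chosen for illustration. Gaze only ever produces a suggestion; a separate gesture or touch event is what commits it.

```python
from typing import Optional


class GazeSelector:
    """Hypothetical dwell-then-confirm selector (illustrative names and values)."""

    def __init__(self, dwell_seconds: float = 0.4) -> None:
        # Assumed threshold; a real system would tune this per user and context.
        self.dwell_seconds = dwell_seconds
        self._target: Optional[str] = None
        self._since: float = 0.0

    def on_gaze(self, target: Optional[str], t: float) -> None:
        # Restart the dwell timer whenever the gaze moves to a different target.
        if target != self._target:
            self._target = target
            self._since = t

    def suggested(self, t: float) -> Optional[str]:
        # A target is suggested only after the gaze has rested on it long enough.
        if self._target is not None and t - self._since >= self.dwell_seconds:
            return self._target
        return None

    def on_confirm(self, t: float) -> Optional[str]:
        # A gesture or touch commits the current suggestion, if there is one.
        return self.suggested(t)


selector = GazeSelector()
selector.on_gaze("cafe", t=0.0)
early = selector.on_confirm(t=0.1)   # too soon: gaze has not dwelled yet
later = selector.on_confirm(t=0.5)   # dwell threshold passed: commits "cafe"
```

In this sketch a glance alone never triggers anything, and a confirm action without a settled gaze is ignored, which is one simple way to keep the interface from feeling jumpy.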

Why it lands: it reduces “interface effort”

Even as a concept, the appeal is obvious. It tries to remove the friction of hunting through apps and menus. Instead, information comes to where you are looking, and actions feel closer to how you already move in the world.

That is also the risk. If a system reacts too eagerly to gaze or motion, it can feel jumpy or intrusive. The design challenge is making the interface feel calm while still being responsive.

What Nokia is positioning

This vision implicitly reframes the phone from “a screen you hold” into “a personal perception layer.” It suggests a brand future built on research-led interaction design rather than only on industrial design or hardware specs.

What to steal for your own product and experience work

- Design around one primary input. If gaze is the lead, make gesture and touch supporting, not competing.

- Use spatial audio as a UI primitive. Direction and distance can be an interface, not just a soundtrack.

- Show intent, then ask for confirmation. Let the system suggest based on attention, but require an explicit action to commit.

- Keep overlays purposeful. Persistent HUD clutter kills trust. Reveal only what helps in the moment.

- Prototype the “feel,” not just the screens. Latency, comfort, and social acceptability decide whether this works in real life.

A few fast answers before you act

What is Nokia “Mixed Reality” in this context?

It is a concept vision of future interaction that combines near-to-eye displays with gaze tracking, spatial audio, gesture, and touch to make navigation feel more ambient and less menu-driven.

What does “near-to-eye display” mean?

A display positioned close to the eye, often glasses-style, that can place digital information in your field of view without requiring you to hold up a phone screen.

How does gaze tracking change interface design?

It lets the system infer what you are attending to, so selection and navigation can start from where you look. Good designs still require a secondary action to confirm, to avoid accidental triggers.

Why include 3D audio in a mixed reality interface?

Because sound can guide attention without demanding visual focus. Directional cues can help you locate people, alerts, or content while keeping your eyes on the real environment.
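As a rough illustration of direction and distance acting as a cue, the Python sketch below maps a source's azimuth and distance to left and right channel gains. It uses plain constant-power stereo panning with inverse-distance attenuation, which is a deliberately simplified stand-in for real 3D audio rendering. The function name `stereo_cue` and its parameters are assumptions for this example and do not come from the video.

```python
import math
from typing import Tuple


def stereo_cue(azimuth_deg: float, distance_m: float) -> Tuple[float, float]:
    """Return (left_gain, right_gain) for a sound source (simplified sketch)."""
    # Clamp azimuth to the frontal arc: -90 is hard left, +90 is hard right.
    az = max(-90.0, min(90.0, azimuth_deg))
    # Constant-power pan: map azimuth onto an angle between 0 and pi/2.
    theta = (az + 90.0) / 180.0 * (math.pi / 2)
    # Inverse-distance attenuation; sources closer than 1 m are not boosted.
    gain = 1.0 / max(1.0, distance_m)
    return gain * math.cos(theta), gain * math.sin(theta)


# A source straight ahead plays equally in both ears; one to the right
# and farther away plays quieter and mostly in the right ear.
ahead = stereo_cue(azimuth_deg=0.0, distance_m=1.0)
right_far = stereo_cue(azimuth_deg=60.0, distance_m=4.0)
```

Even this crude mapping lets a listener tell roughly where something is and how far away it is without looking at a display.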

What is the biggest UX risk with gaze and gesture interfaces?

Unwanted activation. If the interface reacts to normal eye movement or casual gestures, it feels unstable. The cure is clear feedback plus deliberate “confirm” actions.