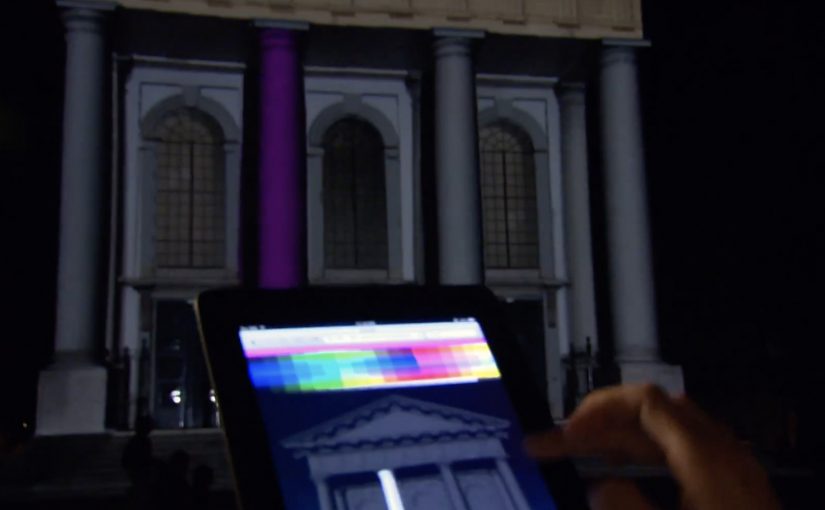

A 190 m² LED city surface that reacts to people

To showcase its A2 concept at Design Miami 2011, Audi created a 190 m² three-dimensional LED surface that offered a glimpse of a future city in which infrastructure and public space are shared between pedestrians and driverless cars. The installation demonstrated how the city surface would continuously gather information about people’s movements and allow vehicles to interact with the environment.

The installation used a real-time graphics engine and tracking software that received live inputs from 11 Xbox Kinect cameras mounted above the visitors’ heads. The software turned the visitors’ movements into patterns displayed on the LED surface as they walked.
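The source names the pipeline (overhead cameras, tracking software, a real-time graphics engine, the LED surface) but not how it was built. As a purely hypothetical illustration of that kind of loop, the sketch below treats the floor as a flat 19 × 10 grid of 1 m² cells (an assumption; the real surface was three-dimensional), takes each camera’s detections as `(x, y)` floor positions, merges them, and returns a brightness frame with fading trails. `FloorGrid`, `update`, `decay` and `radius` are invented names, and no Kinect or LED-driver API is used or implied.

```python
import math
from dataclasses import dataclass, field


@dataclass
class FloorGrid:
    """Hypothetical LED floor modelled as a width x height grid (1 cell = 1 m^2, assumed)."""
    width: int
    height: int
    decay: float = 0.8    # share of brightness kept each frame (assumed value)
    radius: float = 1.5   # how far, in cells, a visitor's glow reaches (assumed value)
    cells: list = field(init=False, default_factory=list)

    def __post_init__(self):
        # Start with a dark floor.
        self.cells = [[0.0] * self.width for _ in range(self.height)]

    def update(self, camera_frames):
        """Run one tick of the input -> processing -> output loop.

        camera_frames: one list of (x, y) floor positions per camera, in cell
        units. Overlapping cameras may report the same person twice; taking
        the max per cell stops a duplicate detection from doubling brightness.
        """
        # Processing, step 1: fade the previous frame so movement leaves trails.
        for row in self.cells:
            for i in range(len(row)):
                row[i] *= self.decay

        # Input: merge detections from every camera into one list.
        positions = [p for frame in camera_frames for p in frame]

        # Processing, step 2: light cells near each detected visitor.
        for y in range(self.height):
            for x in range(self.width):
                cx, cy = x + 0.5, y + 0.5  # cell centre
                for px, py in positions:
                    d = math.hypot(cx - px, cy - py)
                    if d <= self.radius:
                        glow = 1.0 - d / self.radius
                        self.cells[y][x] = max(self.cells[y][x], glow)

        # Output: the brightness frame a (hypothetical) LED driver would render.
        return self.cells


grid = FloorGrid(width=19, height=10)
# Two cameras see the same visitor standing at the centre of cell (2, 3).
frame = grid.update([[(2.5, 3.5)], [(2.5, 3.5)]])
print(frame[3][2])  # full brightness under the visitor
```

Decaying the old frame before adding new detections is what turns a series of positions into visible trails; a real installation would also need camera calibration and person tracking, which this sketch skips.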

The punchline: the street becomes an interface

This is a future-city story told through interaction, not a render. You do not watch a concept. You walk on it. The floor responds, and suddenly “data-driven public space” is something you can feel in your body.

In smart city and mobility innovation, the fastest way to make future infrastructure feel believable is to turn sensing and responsiveness into a physical interaction people can experience in seconds.

Why it holds your attention

Because it turns an abstract topic (infrastructure sharing, sensing, autonomous behavior) into a single, legible experience. Your movement creates immediate visual feedback, and that feedback makes the bigger idea believable, at least for a moment.

What Audi is signaling here

A vision of cities where surfaces sense movement continuously and systems adapt in real time. Not just cars that navigate, but environments that respond.

What to steal for experiential design

- Translate complex futures into one physical interaction people can understand instantly.

- Use real-time feedback loops (input, processing, output) so the concept feels alive.

- Make the visitor the driver of the demo. Their movement should generate the proof.

A few fast answers before you act

What did Audi build for Design Miami 2011?

A 190 m² three-dimensional LED surface installation showcasing an “urban future” vision tied to the Audi A2 concept.

What was the installation demonstrating?

A future city surface that continuously gathers information about people’s movements and enables vehicles to interact with the environment.

How was visitor movement captured?

The post says 11 Xbox Kinect cameras mounted above visitors’ heads provided live inputs to tracking software.

What was the core mechanic?

Real-time tracking of visitor movement translated into dynamic patterns displayed on the LED surface, visualizing how a responsive city surface might behave.