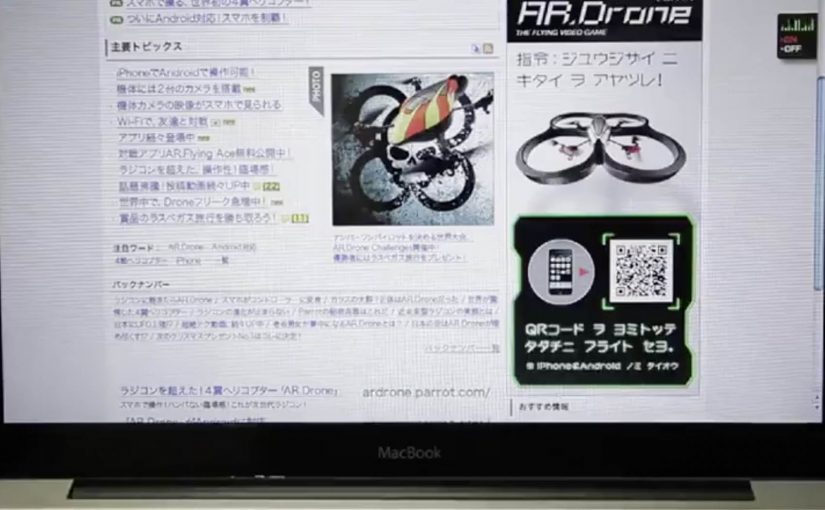

The Parrot AR.Drone is a quadrotor you can control with an iPhone or iPad. Instead of explaining that in copy, Beacon Communications Tokyo built an interactive web banner that lets people experience the idea.

The banner displays a QR code. Scan it and your phone becomes the controller for a virtual AR.Drone that appears inside the banner. You pilot it around the screen using your smartphone, effectively turning the ad into a small playable product demo.

In consumer electronics launches, the most persuasive interactive advertising is a playable demo that mirrors the product experience in seconds.

Why this banner stands out

Most banners talk about what a product can do. This one makes the product behaviour the message. If the AR.Drone is “controlled by your phone,” the ad is controlled by your phone. That direct mapping makes the idea instantly believable.

Standalone takeaway: If your product is an interface, let the audience use that interface inside the ad unit, even in a simplified form.

The mechanic: QR to second screen control

The QR code is not decoration. It is the bridge that turns a passive placement into a two-device experience. The banner stays on the desktop screen. Control moves to the phone. That split makes the interaction feel closer to the real product, and it also creates a small sense of “this is special” because the ad is no longer self-contained.
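To make the handoff concrete, here is a minimal sketch of how a QR-based pairing could work. It is not Beacon's implementation, which the coverage does not describe. The `PairingRelay` class, the `s` query parameter, and the example URL are all hypothetical names chosen for illustration. The idea is that the banner opens a session, encodes a session URL in the QR code, and the phone then sends control inputs tagged with that session's token.

```python
import secrets
from dataclasses import dataclass
from urllib.parse import urlencode


@dataclass
class DroneState:
    # Position of the virtual drone inside the banner, normalised to 0..1.
    x: float = 0.0
    y: float = 0.0


class PairingRelay:
    """In-memory stand-in for a server linking one banner to one phone (assumed design)."""

    def __init__(self, base_url: str):
        self.base_url = base_url
        self.sessions: dict[str, DroneState] = {}

    def open_session(self) -> tuple[str, str]:
        """Called when the banner loads: returns (token, URL to encode in the QR code)."""
        token = secrets.token_urlsafe(8)
        self.sessions[token] = DroneState()
        return token, f"{self.base_url}?{urlencode({'s': token})}"

    def handle_phone_input(self, token: str, dx: float, dy: float) -> DroneState:
        """Called for each control message from the phone; moves the virtual drone."""
        state = self.sessions[token]  # KeyError means an unknown or expired session
        # Clamp so the drone cannot leave the banner area.
        state.x = min(1.0, max(0.0, state.x + dx))
        state.y = min(1.0, max(0.0, state.y + dy))
        return state


relay = PairingRelay("https://example.com/fly")  # placeholder URL
token, qr_url = relay.open_session()             # the banner would render qr_url as a QR code
state = relay.handle_phone_input(token, 0.3, 2.0)
```

Rendering the QR image and the real-time transport between phone and banner are deliberately left out. The sketch only shows the pairing logic that ties the two screens together.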

How it creates attention without shouting

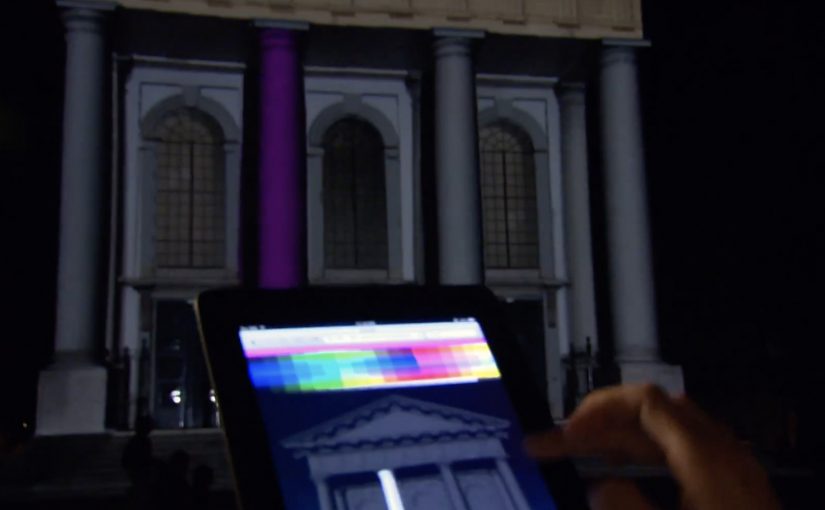

As described in industry coverage, users could fly the drone around the page and even “blast” parts of the site to reveal the full-screen message. That gives the interaction a purpose and a payoff. It is not just movement. It is progression.

Beacon also reported unusually strong click-through performance compared to typical expectations for the placement. In this case, that makes sense. People do not click because they were interrupted. They click because they were already playing.

What to steal from The Flying Banner

- Replicate the product, do not describe it. A short, real interaction beats a long explanation.

- Use one clear bridge between devices. QR works here because it is immediate and simple.

- Design an obvious payoff. A reveal, a score, a result. Give the interaction a reason.

- Keep the controls teachable. If people cannot learn it in seconds, the banner loses them.

- Make it readable for spectators. Movement on the main screen helps others quickly understand what is happening.

A few fast answers before you act

What is “The Flying Banner” for Parrot AR.Drone?

It is an interactive web banner where scanning a QR code turns your smartphone into a controller for a virtual AR.Drone that you can pilot inside the banner.

Why is this a stronger demo than a normal video ad?

Because it lets people feel the core promise, phone-controlled flight, through direct interaction rather than description.

What role does the QR code play in the experience?

It is the handoff mechanism from desktop to phone. The desktop shows the “world.” The phone becomes the controller, matching how the real product is used.

What is the biggest risk with multi-device banner ideas?

Drop-off. If the connection step is slow, confusing, or unreliable, most users abandon before they experience the payoff.

How would you modernize this mechanic today?

Keep the principle of second-screen control, but reduce friction. Use a fast connect flow and ensure the experience is still satisfying even if someone chooses not to connect a phone.