Airports are crowded with people from different backgrounds. This Christmas, KLM brings them together with Connecting Seats: two seats that translate every language in real time, so people with different cultures, world views, and languages can understand each other.

The experience design move

KLM does not try to deliver a holiday message. Instead, it creates a small, human interaction in a high-friction environment. You sit down. You speak normally. The seat itself lowers the barrier between strangers.

Why this works as a Christmas idea

Christmas campaigns often rely on film and sentiment. This one uses participation. It makes connection visible. It gives the brand a role that feels practical rather than promotional.

The pattern to steal

If you want to create brand meaning in public spaces, this is a strong structure:

- Pick a real-world tension people already feel (crowded, anonymous, culturally mixed spaces).

- Introduce a simple intervention that changes behaviour in the moment.

- Let the interaction carry the message, not a slogan.

A few fast answers before you act

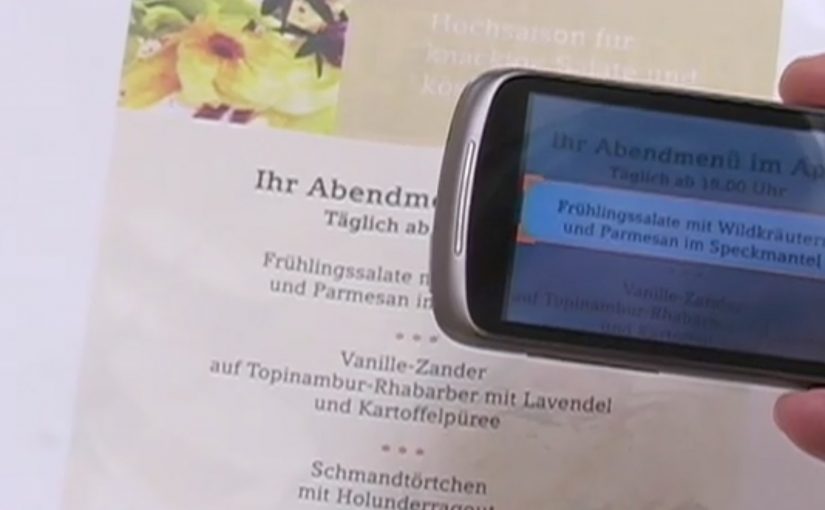

What are KLM Connecting Seats?

Two seats designed to translate language in real time, so strangers can understand each other.

Where does this idea make sense operationally?

In airports and other transient spaces where people from different backgrounds sit near each other but rarely interact.

What is the core brand outcome?

A memorable, lived proof of “bringing people together,” delivered through an experience rather than a claim.