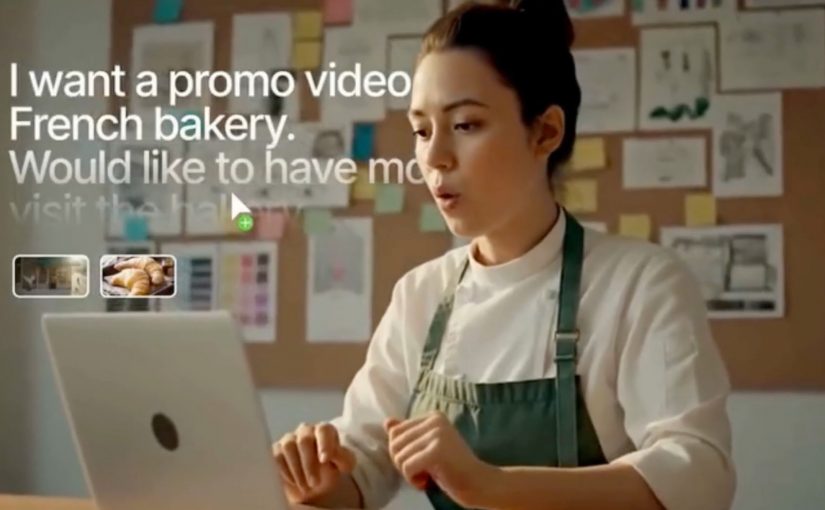

InVideo just dropped a campaign that might be one of the sharpest AI ads to date. Or one of the most controversial.

Not because the ad itself is “good” or “bad.” But because of what it demonstrates.

The premise is simple. A local bakery wants awareness and footfall. A single prompt arrives. Then a “creative team” appears on screen: a writer, director, producer, and sound designer. They brainstorm, storyboard, pull assets, debate tone, change direction midstream, swap narrators, land a punchline, and ship a finished promo.

The twist is that the “team” is not human. It is AI agents collaborating in real time.

Some people will see this and think: finally, creativity at the speed of thought. Others will see it and think: here comes manufactured content. At industrial scale.

So let’s unpack what’s actually happening here. Not the hype. Not the fear. The shift.

What this campaign is really showing

On the surface, it’s a product story.

Under the surface, it’s a proof-of-concept for a new production model. Prompt-to-video, orchestrated by role-based agents, pulling from your assets, and iterating like a team would.

That matters because we are crossing a line:

- Yesterday: AI helped you edit.

- Today: AI can generate components.

- Now: AI attempts to run the full production loop. Brief to concept to execution to polish.

If that sounds incremental, it isn’t. The bottleneck in content has never been “ideas.” It has been translation. Turning intent into something shippable, on brand, on time, and fit for a channel.

This is what changes. The translation cost collapses.

The “agents” idea. Why it clicks so hard

Most AI video tooling gets described as features: text-to-video, voiceover, stock replacement, templates.

Agents are a different mental model. They mimic how work gets done.

Instead of one tool trying to be everything, you have multiple role-based systems that divide the labor:

- Writer: Hook, script, narrative beats

- Director: Framing, pacing, scene intent

- Producer: Assets, structure, feasibility, assembly

- Sound designer: Voice, music cues, timing, emphasis

The output is not just “a video.” It’s a workflow that looks like collaboration.

And that’s why the campaign is sticky. It doesn’t just show a capability. It shows an operating model.

Fast definition. What “AI agents” means in this context

AI agents are role-based AI workers that take responsibility for a portion of the task, coordinate with other roles, and iteratively refine toward a shared goal.

In practical terms, this is orchestration. Task decomposition. Decision loops. And multi-step iteration that feels closer to a real production process than a single prompt and a single output.

For enterprise marketing teams, agentic video tools compress production time, which makes governance, briefing quality, and brand standards the real constraints.
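To make "orchestration, task decomposition, decision loops" concrete, here is a minimal sketch in Python. It is not InVideo's implementation, and every name in it (`Draft`, `PIPELINE`, `review`, `run`) is hypothetical. The role functions just write placeholder strings where a real system would call a model, and the review step is a stand-in that forces one revision before approving.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class Draft:
    """Shared state that every role reads from and writes to."""
    brief: str
    parts: Dict[str, str] = field(default_factory=dict)
    notes: List[str] = field(default_factory=list)


# Hypothetical role functions. A real system would call a model here.
def writer(d: Draft) -> None:
    d.parts["script"] = f"Hook and beats for: {d.brief}"


def director(d: Draft) -> None:
    d.parts["scenes"] = f"Pacing and framing for: {d.parts['script']}"


def producer(d: Draft) -> None:
    d.parts["assets"] = f"Assets assembled for: {d.parts['scenes']}"


def sound_designer(d: Draft) -> None:
    d.parts["audio"] = f"Voice and music cues for: {d.parts['script']}"


# Task decomposition: one ordered step per role.
PIPELINE: List[Tuple[str, Callable[[Draft], None]]] = [
    ("writer", writer),
    ("director", director),
    ("producer", producer),
    ("sound_designer", sound_designer),
]


def review(d: Draft, round_no: int) -> bool:
    """Stand-in decision step: all parts present and at least one revision."""
    complete = all(k in d.parts for k in ("script", "scenes", "assets", "audio"))
    return complete and round_no >= 2


def run(brief: str, max_rounds: int = 3) -> Draft:
    """Multi-step iteration: run every role, review, repeat until approved."""
    d = Draft(brief)
    for round_no in range(1, max_rounds + 1):
        for _name, agent in PIPELINE:
            agent(d)
        if review(d, round_no):
            d.notes.append(f"approved in round {round_no}")
            break
        d.notes.append(f"round {round_no}: revise")
    return d


result = run("Local bakery wants awareness and footfall")
print(result.notes)
```

The point of the sketch is the shape, not the content: the brief is the only human input, each role owns one slice of the work, and the review loop is where governance and brand standards would actually plug in.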

Why the bakery storyline matters. It’s not about video

The reason this lands is the bakery.

A small business is a stand-in for every team that has historically been excluded from “premium” creative production. Not because they lacked ideas, but because they lacked:

- Budget

- Time

- Specialist talent

- Access to production infrastructure

If AI production becomes cheap and fast, a new baseline emerges.

Customer expectations tend to move in one direction. Up.

In other industries we’ve seen this pattern repeatedly:

- Shipping went from weeks to days. Then days to “why isn’t it here tomorrow?”

- Support went from office hours to 24/7 chat.

- Information went from gatekept to instant.

Content is heading the same way.

When a local business can generate credible, channel-ready creative quickly, the competitive advantage shifts away from “who can produce” and toward “who can differentiate.”

So is this the future of content, or a shortcut that kills creativity?

Both outcomes are plausible, because the tool is not the strategy.

Here are the three trajectories I think matter.

1) Creativity gets unlocked for more people

AI reduces the friction between an idea and a first draft. That can empower founders, small teams, educators, non-profits, internal comms teams, and marketers who have always had the brief but not the bandwidth.

If you’ve ever had a good concept die in a doc because production was too heavy, you know how big this is.

The upside version of the future looks like:

- More experimentation

- More niche creativity

- More localized storytelling

- Faster learning cycles

2) The internet floods with “content wallpaper”

When production becomes cheap, volume spikes. When volume spikes, attention gets harder. When attention gets harder, teams chase what performs. When teams chase what performs, sameness creeps in.

The downside version of the future looks like:

- Infinite mediocre ads

- Homogenized pacing and tone

- Interchangeable visual language

- “Good enough” content dominating feeds

That’s the fear behind “slop at scale.” Not that content exists. That it becomes meaningless.

3) Premium creative becomes more premium

There is a third outcome that’s often missed.

When baseline production becomes abundant, true differentiation becomes rarer.

Human advantages do not disappear. They concentrate around the things AI still struggles to do reliably:

- Strategy and intent. What are we trying to change in the market?

- Cultural nuance. What does this mean here, with these people?

- Original point of view. What do we stand for that others don’t?

- Brand taste. What is “on brand” beyond templates?

- Ethical judgment. What should we not do even if we can?

- Lived insight. What’s the human truth behind the message?

In that world, AI does not replace creative leaders. It raises the bar on them.

The practical question every marketing leader needs to answer

People debate whether AI can “replace creatives.” That’s not the operational question.

The operational question is: Where do you want humans to be irreplaceable, and where do you want machines to be fast?

Because if AI handles production, your competitive edge moves to:

- The quality of your briefs

- The clarity of your brand system

- The strength of your POV

- The governance of your outputs

- The measurement of creative impact

- The speed of iteration without brand drift

A simple maturity test you can run this week

If AI can produce at scale, the risk is not “bad videos.” It’s unmanaged systems.

Ask this:

Who owns the continuous loop of prompting, testing, learning, scaling, and deprecating AI-driven creative workflows in your organization?

If the answer is “no one,” you don’t have an AI capability. You have scattered experiments.

My take

Production is getting cheaper. Differentiation is getting harder.

So the real decision is not whether you can generate more content. It’s whether you can scale output without losing taste, brand truth, and accountability.

Is this the future of content, or a shortcut that kills creativity? It depends on who owns the brief, who owns the guardrails, and who is willing to say no.

A few fast answers before you act

Can AI agents replace a creative team?

AI agents can replicate parts of the production workflow and speed up iteration. They do not automatically replace strategy, taste, or cultural judgment. Those still require accountable humans.

What does “prompt-to-video” actually mean?

Prompt-to-video is the ability to turn a single prompt into a finished video, covering script, scenes, voice, music, edit, and formatting, without traditional filming or manual timeline editing.

Will this create more generic ads?

It can, especially when teams optimize for speed over differentiation. The antidote is strong briefs, clear brand constraints, and human ownership of taste and intent.