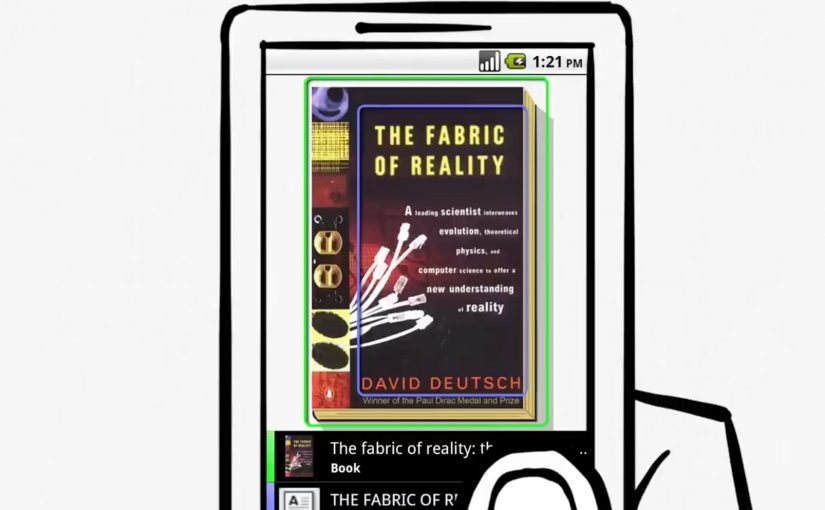

With Google Goggles, you take an Android phone, snap a photo, tap a button, and Google treats the image as your search query. It analyses both the imagery and any text in the photo, then returns results based on what it recognises.

Before this, the iPhone already had an app that let users run visual searches for price and store details by photographing CD covers and books. Google now extends the same behaviour to broader, general-purpose search.

What Google Goggles changes in visual search

This is not a novelty camera trick; it is a shift in input. The photo becomes the query, and the system draws on two signals:

- What the image contains (visual recognition).

- What the image says (text recognition).

Scale is the enabling factor

Google positions this as search at internet scale rather than a lookup against a small database. The image index it describes contains 1 billion images.

Why this matters beyond “cool tech”

When the camera becomes a search interface, the web becomes more accessible in moments where typing is awkward or impossible. You can point, capture, and retrieve meaning in a single flow, using the environment as the starting point.

A few fast answers before you act

What does Google Goggles do, in one sentence?

It lets you take a photo on an Android phone and uses the imagery and text in that photo as your search query.

What existing product is it compared with?

An iPhone app already enables visual searches for price and store details via photos of CD covers and books.

How large is the image index?

1 billion images.

What supporting evidence does the original post include?

A demo video showing the visual search capability.