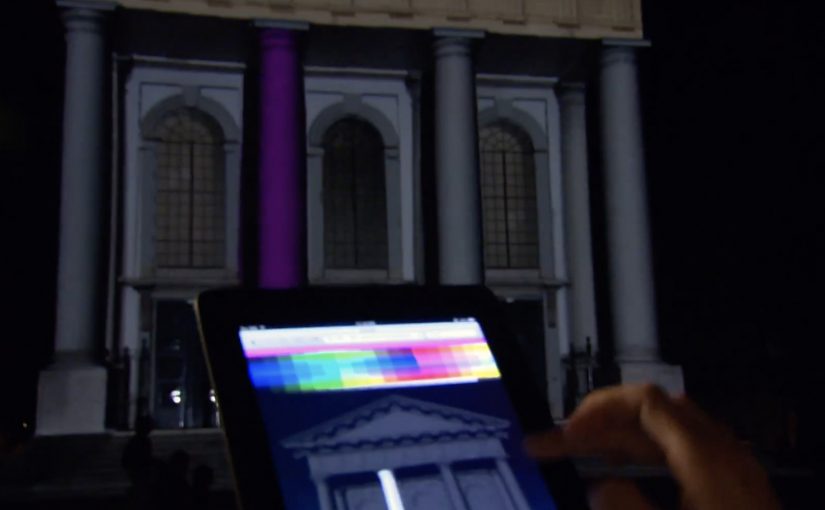

After staging 3D video mapping projections on objects and buildings worldwide, NuFormer adds interactivity to the mix in this test.

Here the spectators become the controller and interact with the building in real time through gesture-based tracking (Kinect). People can also influence the projected content using an iPad, iPhone, or a web-based application available on both mobile and desktop. This test uses Facebook interactivity, but the idea is that other social media signals can be incorporated as well.

In large-scale public brand experiences, projection mapping becomes more than spectacle when it gives the crowd meaningful control instead of a one-way show.

From mapped surface to live interface

Projection mapping usually works like a film played on architecture. This flips it into a live system. The building is still the canvas, but the audience becomes an input layer. Gesture tracking drives the scene changes, and second-screen control extends participation beyond the people standing closest to the sensor.

Standalone takeaway: Interactive mapping is most compelling when the control model is instantly legible (wave, move, tap) and the projection responds quickly enough that people trust the cause-and-effect.

Why the “crowd as controller” move matters

Interactivity changes what people remember. A passive crowd remembers visuals. An active crowd remembers ownership. The moment someone realises their movement, phone, or social input changes the facade, the projection stops being “content” and becomes “play.”

That also changes the social dynamics around the installation. People look for rules, teach each other controls, and stick around to try again. The result is longer dwell time and more organic filming, because participation is the story.

What brands can do with this, beyond a tech demo

According to coverage of the test and NuFormer’s own positioning, branded content, logos, or product placement can be incorporated into interactive projection applications. The strategic upside is that you can design a brand moment that is co-created by the crowd, rather than merely watched.

When social signals are part of the input (Facebook in this case), the experience can also create a bridge between the physical venue and online participation. That hybrid loop is where campaigns can scale.

What to steal for your next mapping brief

- Pick one primary control. Gesture, phone, or web. Then add a secondary layer only if it increases participation rather than confusion.

- Make feedback immediate. The projection must respond fast or people assume it is fake or broken.

- Design for “spectator comprehension.” Bystanders should understand what changed and why, from a distance.

- Use social inputs carefully. Keep the mapping between input and output obvious so it feels fair, not random.

- Plan for crowd flow. Interactive mapping is choreography. Sensors, sightlines, and safe space matter as much as visuals.

A few fast answers before you act

What is “interactive projection mapping” in this NuFormer test?

It is 3D projection mapping where the projected content changes in real time based on audience input. Here that input includes Kinect gesture tracking plus control via iPad, iPhone, and web interfaces.

Why add phones and web control when you already have gesture tracking?

Gesture tracking usually limits control to people near the sensor. Second-screen control expands participation to more people and enables a clearer “turn-taking” interaction model.
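One way to picture a turn-taking model is a simple queue in which only one phone or web user drives the facade at a time. The sketch below is an illustration under stated assumptions, not NuFormer's implementation: the class name `TurnQueue`, the user IDs, and the 20-second turn length are all hypothetical.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, Optional


@dataclass
class TurnQueue:
    """Hypothetical turn-taking model: one active controller at a time."""

    turn_seconds: float = 20.0  # assumed turn length, not from the source
    waiting: Deque[str] = field(default_factory=deque)
    active: Optional[str] = None
    turn_started: float = 0.0

    def join(self, user_id: str) -> None:
        """Add a user to the queue unless they are already active or waiting."""
        if user_id != self.active and user_id not in self.waiting:
            self.waiting.append(user_id)

    def tick(self, now: float) -> Optional[str]:
        """Advance the clock: end an expired turn and promote the next user."""
        if self.active is not None and now - self.turn_started >= self.turn_seconds:
            self.active = None
        if self.active is None and self.waiting:
            self.active = self.waiting.popleft()
            self.turn_started = now
        return self.active

    def accepts(self, user_id: str) -> bool:
        """Only input from the active user should change the projection."""
        return user_id == self.active
```

In a setup like this, the projection could display whose turn it is, which would also help bystanders follow what is happening.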

How does Facebook interactivity fit into a projection experience?

It acts as an additional input stream, letting social actions influence what appears on the building. The key is to make the mapping from social action to visual change understandable.

What is the biggest failure mode for interactive mapping?

Latency and ambiguity. If the response is slow or the control rules are unclear, crowds disengage quickly because they cannot tell whether their input matters.
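Latency can be checked during rehearsal by logging when an input arrives and when the projection visibly responds. The sketch below assumes such a log exists as pairs of millisecond timestamps; the function name `latency_report` and the 200 ms budget are illustrative assumptions, not figures from the NuFormer test.

```python
from statistics import median
from typing import Dict, List, Tuple


def latency_report(
    events: List[Tuple[int, int]], budget_ms: int = 200
) -> Dict[str, float]:
    """Summarise input-to-response latency.

    events: (input_ms, response_ms) timestamp pairs from a hypothetical log.
    budget_ms: assumed acceptable delay; tune it for the actual installation.
    """
    if not events:
        raise ValueError("need at least one event")
    latencies = [response - received for received, response in events]
    over_budget = sum(1 for value in latencies if value > budget_ms)
    return {
        "median_ms": median(latencies),
        "max_ms": max(latencies),
        "over_budget_share": over_budget / len(latencies),
    }
```

A high `over_budget_share` would suggest that a noticeable part of the crowd sees the facade react too late to connect it with their own action.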

What should a brand measure in an interactive mapping activation?

Dwell time, participation rate (people who trigger changes), repeat interaction, crowd size over time, and the volume and quality of user-captured video shared during the event window.
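Several of these measures reduce to simple arithmetic once visits are logged. The sketch below assumes a hypothetical per-visitor record (`Visit`, with arrival time, departure time, and a count of triggered changes); how that data would be collected on site is outside its scope.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Visit:
    """Hypothetical record for one spectator at the installation."""

    arrive_s: float
    leave_s: float
    interactions: int  # number of projection changes this person triggered


def activation_metrics(visits: List[Visit]) -> Dict[str, float]:
    """Compute mean dwell time, participation rate, and repeat-interaction rate."""
    if not visits:
        raise ValueError("need at least one visit")
    participants = [v for v in visits if v.interactions > 0]
    repeaters = [v for v in participants if v.interactions > 1]
    return {
        "mean_dwell_s": sum(v.leave_s - v.arrive_s for v in visits) / len(visits),
        # Share of all visitors who triggered at least one change.
        "participation_rate": len(participants) / len(visits),
        # Share of participants who came back for more than one interaction.
        "repeat_rate": len(repeaters) / len(participants) if participants else 0.0,
    }
```

Crowd size over time and shared video volume would need separate sources, such as headcounts at fixed intervals and social listening during the event window.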