The pilot phase is over. “Use” loses. “Integrate” wins.

Those who merely use AI will lose. Those who integrate AI will win. The experimentation era produced plenty of impressive demos. Now comes the part that separates winners from tourists: making AI an operating capability that compounds.

Most organizations are still stuck in tool adoption. A team runs a prompt workshop. Marketing trials a copy generator. Someone adds an “intelligent chatbot” to the website. Useful, yes. Transformational, no.

The real shift is “use vs integrate”, because the differentiator is not whether you have access to AI. Everyone does. The differentiator is whether you can make AI repeatable, governed, measurable, and finance-credible across workflows that actually move revenue, cost, speed, and quality.

If you want one question to sanity-check your AI maturity, it is this.

Who owns the continuous loop of scouting, testing, learning, scaling, and deprecating AI capabilities across the business?

What “integrating AI” actually means

Integration is not “more prompts”. It is process integration with an operating model around it.

In practice, that means treating AI like infrastructure. Same mindset as data platforms, identity, and analytics. The value comes from making it dependable, safe, reusable, and measurable.

Here is what “AI as infrastructure” looks like when it is real:

- Data access and permissions that are designed, not improvised. Who can use what data, through which tools, with what audit trail.

- Human-in-the-loop checkpoints by design. Not because you distrust AI. Because you want predictable outcomes, accountability, and controllable risk.

- Reusable agent patterns and workflow components. Not one-off pilots that die when the champion changes teams.

- A measurement layer finance accepts. Clear KPI definitions, baselines, attribution logic, and reporting that stands up in budget conversations.
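
To make the first two points concrete, here is a minimal sketch of one AI step wrapped in a designed permission check, a human-in-the-loop checkpoint, and an audit trail. Everything in it is an illustrative assumption, not a reference implementation: the `ALLOWED` policy table, the role and data-class names, and the `run_ai_step` helper are hypothetical, and in a real setup the policy would live in your identity and data platforms rather than in code.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical policy table: which role may send which class of data to an AI tool.
ALLOWED = {
    ("marketing", "public_content"),
    ("finance", "public_content"),
    ("finance", "financial_records"),
}


@dataclass
class AuditLog:
    """In-memory audit trail; a real one would be durable and append-only."""
    entries: list = field(default_factory=list)

    def record(self, role, data_class, decision):
        self.entries.append({
            "at": datetime.now(timezone.utc).isoformat(),
            "role": role,
            "data_class": data_class,
            "decision": decision,
        })


def run_ai_step(role, data_class, draft_fn, approve_fn, log):
    """Run one AI step behind a permission check and a human checkpoint.

    draft_fn stands in for the AI call; approve_fn stands in for the reviewer.
    Returns the approved draft, or None if the step was denied or rejected.
    """
    if (role, data_class) not in ALLOWED:
        log.record(role, data_class, "denied")
        return None
    draft = draft_fn()
    if not approve_fn(draft):
        log.record(role, data_class, "rejected_by_reviewer")
        return None
    log.record(role, data_class, "approved")
    return draft


log = AuditLog()
copy = run_ai_step(
    "marketing", "public_content",
    draft_fn=lambda: "Draft campaign copy",
    approve_fn=lambda draft: True,  # placeholder for a real human review
    log=log,
)
```

The point of the sketch is the shape, not the code. Every outcome, including denials and rejections, leaves a record, and the same wrapper can be reused across workflows instead of being rebuilt per pilot.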

This is why the “pilot phase is over”. You do not win by having more pilots. You win by building the machinery that turns pilots into capabilities.

In enterprise operating models, AI advantage comes from repeatable workflow integration with governance and measurement, not from accumulating tool pilots.

The bottleneck is collapsing. But only for companies that operationalize it

A tangible shift is the collapse of specialist bottlenecks.

When tools like Lovable let teams build apps and websites by chatting with AI, the constraint moves. It is no longer “can we build it”. It becomes “can we govern it, integrate it, measure it, and scale it without creating chaos”.

The same applies to performance management. The promise of automated scorecards and KPI insights is not that dashboards look nicer. It is that decision cycles compress. Teams stop arguing about what the number means, and start acting on it.

But again, the differentiator is not whether someone can generate an app or a dashboard once. The differentiator is whether the organization can make it repeatable and governed. That is the gap between AI theatre and AI advantage.

Ownership. The million-dollar question most companies avoid

I still see many organizations framing AI narrowly. Generating ads. Drafting social posts. Bolting a chatbot onto the site.

Those are fine starter use cases. But they dodge the million-dollar question. Who owns AI as an operating capability?

In my view, it requires explicit, business-led accountability, with IT as platform and risk partner. Two ingredients matter most.

- A top-down mandate with empowered change management

Leaders need a shared baseline for what “integration” implies. Otherwise, every initiative becomes another education cycle. Legal and compliance arrive late. Momentum stalls. People get frustrated. Then AI becomes the next “tool rollout” story. This is where the mandate matters. Not as a slogan, but as a decision framework. What is in scope. What is out of scope. Which risks are acceptable. Which are not. What “good” looks like.

- A new breed of cross-functional leadership

Not everyone can do this. You need a leader whose superpower is connecting the dots across business, data, technology, risk, and finance. Not a deep technical expert, but someone with strong technology affinity who asks the right questions, makes trade-offs, and earns credibility with senior stakeholders. This leader must run AI as an operating capability, not a set of tools.

Back this leader with a tight leadership group that operates as an empowered “AI enablement fusion team”. It spans Business, IT, Legal/Compliance, and Finance, and works in an agile way with shared standards and decision rights. Their job is to move fast through scouting, testing, learning, scaling, standardizing, and deprecating. They build reusable patterns and measure KPI impact so the organization can stop debating and start compounding.

If that team does not exist, AI stays fragmented. Every function buys tools. Every team reinvents workflows. Risk accumulates quietly. And the organization never gets the benefits of scale.

AI will automate the mundane. It will transform everything else

Yes, AI will automate mundane tasks. But the bigger shift is the transformation of the work that remains.

AI changes what “good” looks like in roles that remain human-led. Strategy becomes faster because research and synthesis compress. Creative becomes more iterative because production costs drop. Operations become more adaptive because exception handling becomes a core capability.

The workforce implication is straightforward. Your advantage will come from people who can direct, verify, and improve AI-enabled workflows. Not from people who treat AI as a toy, or worse, as a threat.

There is no one AI tool to rule them all

There is no single AI tool that solves everything. The smart move is to build an AI tool stack that maps to jobs-to-be-done, then standardize how those tools are used.

Also, not all AI tools are worth your time or your money. Many tools look great in demos and disappoint in day-to-day execution.

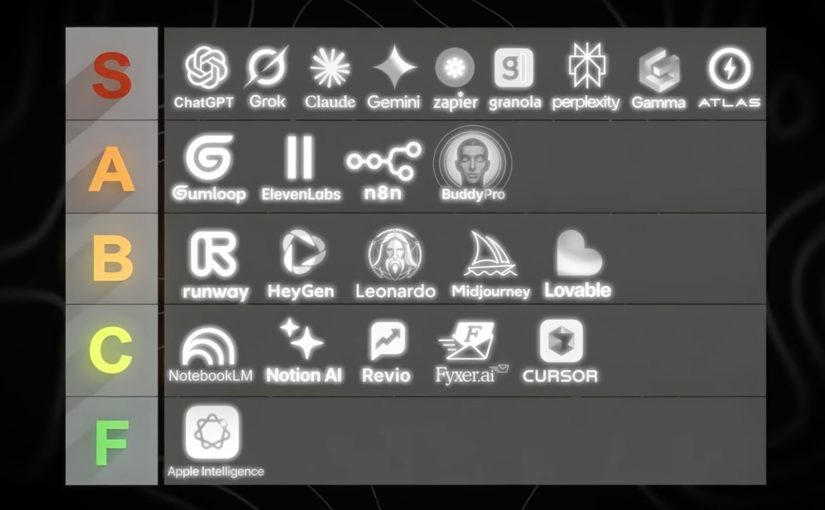

So here is a practical way to think about the landscape: a stack, grouped by what each tool does.

Here is one good example of a practical AI tool stack by use case

Foundation models and answer engines

- ChatGPT: General-purpose AI assistant for reasoning, writing, analysis, and building lightweight workflows through conversation.

- Claude (Anthropic): General-purpose AI assistant with strong long-form writing and document-oriented workflows.

- Gemini (Google): Google’s AI assistant for multimodal tasks and deep integration with Google’s ecosystem.

- Grok (xAI): General-purpose AI assistant positioned around fast conversational help and real-time use cases.

- Perplexity AI: Answer engine that combines web-style retrieval with concise, citation-forward responses.

- NotebookLM: Document-grounded assistant that turns your sources into summaries, explanations, and reusable knowledge.

- Apple Intelligence: On-device and cloud-assisted AI features embedded into Apple operating systems for everyday productivity tasks.

Creative production. Image, video, voice

- Midjourney: High-quality text-to-image generation focused on stylized, brandable visual outputs.

- Leonardo AI: Image generation and asset creation geared toward design workflows and production-friendly variations.

- Runway ML: AI video generation and editing tools for fast content creation and post-production acceleration.

- HeyGen: Avatar-led video creation for localization, explainers, and synthetic presenter formats.

- ElevenLabs: AI voice generation and speech synthesis for narration, dubbing, and voice-based experiences.

Workflow automation and agent orchestration

- Zapier: No-code automation for connecting apps and triggering workflows, increasingly AI-assisted.

- n8n: Workflow automation with strong flexibility and self-hosting options for technical teams.

- Gumloop: Drag-and-drop AI automation platform that connects data, apps, and AI into repeatable workflows.

- YourAtlas: AI sales agent that engages leads via voice, SMS, or chat, qualifies them, and books appointments or routes calls without human involvement.

Productivity layers and knowledge work

- Notion AI: AI assistance inside Notion for writing, summarizing, and turning workspace content into usable outputs.

- Gamma: AI-assisted creation of presentations and documents with fast narrative-to-slides conversion.

- Granola AI: AI notepad that transcribes your device audio and produces clean meeting notes without a bot joining the call.

- Buddy Pro AI: Platform that turns your knowledge into an AI expert you can deploy as a 24/7 strategic partner and revenue-generating asset.

- Revio: AI-powered sales CRM that automates Instagram outreach, scores leads, and provides coaching to convert followers into revenue.

- Fyxer AI: Inbox assistant that connects to Gmail or Outlook to draft replies in your voice, organize email, and automate follow-ups.

Building software faster. App builders and AI dev tools

- Lovable: Chat-based app and website builder that turns requirements into working product UI and flows quickly.

- Cursor AI: AI-native code editor that accelerates coding, refactoring, and understanding codebases with embedded assistants.

Why this video is worth your time

Tool lists are everywhere. What is rare is a ranking based on repeated, operational exposure across real businesses.

Dan Martell frames this in a way I like. He treats tools as ROI instruments, not as shiny objects. He has tested a large number of AI tools across his companies and sorted them into what is actually worth adopting versus what is hype.

That matters because most teams do not have a tooling problem. They have an integration problem. A “best tools” list only becomes valuable when you connect it to your operating model, your workflows, your governance, and your KPI layer.

The takeaway for digital leaders

If you are a CDO, CIO, CMO, or you run digital transformation in any serious way, here is the practical stance.

- Stop optimizing for pilots. Start optimizing for capabilities.

- Decide who owns the continuous loop. Make it explicit. Fund it properly.

- Build reusable patterns with governance. Measure what finance accepts.

- Treat tools as interchangeable components. Your real advantage is the operating model that lets you reuse, scale, and improve AI capabilities over time.

That is what “integrate” means. And that is where the winners will be obvious.

A few fast answers before you act

What does “integrating AI” actually mean?

Integrating AI means embedding AI into core workflows with clear ownership, governance, and measurement. It is not about running more pilots or using more tools. It is about making AI repeatable, auditable, and finance-credible across the workflows that drive revenue, cost, speed, and quality.

What is the difference between using AI and integrating AI?

Using AI is ad hoc and tool-led. Teams experiment with prompts, copilots, or point solutions in isolation. Integrating AI is workflow-led. It standardizes data access, controls, reusable patterns, and KPIs so AI outcomes can scale across the organization.

What is the simplest way to test AI maturity in an organization?

Ask who owns the continuous loop of scouting, testing, learning, scaling, and deprecating AI capabilities. If no one owns this end to end, the organization is likely accumulating pilots and tools rather than building an operating capability.

What does “AI as infrastructure” look like in practice?

AI as infrastructure includes standardized access to data, policy-based permissions, auditability, human-in-the-loop checkpoints, reusable workflow components, and a measurement layer that links AI activity to business KPIs.

Why do governance and measurement matter more than AI tools?

Because tools are easy to demo and hard to scale. Governance protects quality and compliance. Measurement protects budgets. Without baselines and attribution that finance trusts, AI remains experimentation instead of an operating advantage.

What KPIs make AI initiatives finance-credible?

Common KPIs include cycle-time reduction, cost-to-serve reduction, conversion uplift, content throughput, quality improvements, and risk reduction. What matters most is agreeing on baselines and attribution logic with finance upfront.
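
As a rough illustration of what “baseline and attribution logic” can mean, the sketch below compares the change in an AI-enabled workflow against the change in a comparable workflow without AI over the same period. The function names and the sample cycle times are made up for the example, and the control-group comparison is just one simple attribution approach; the method finance actually accepts is something to agree with them upfront.

```python
from statistics import mean


def pct_change(baseline, current):
    """Relative change from baseline to current; negative means a reduction."""
    base = mean(baseline)
    return (mean(current) - base) / base


def attributed_change(pilot_before, pilot_after, control_before, control_after):
    """Change in the AI-enabled group minus change in a comparable control group."""
    return pct_change(pilot_before, pilot_after) - pct_change(control_before, control_after)


# Illustrative cycle times in days (invented numbers, not real results).
pilot_before, pilot_after = [10, 10], [7, 7]      # workflow with AI
control_before, control_after = [10, 10], [9, 9]  # similar workflow without AI

effect = attributed_change(pilot_before, pilot_after, control_before, control_after)
print(f"Cycle-time change attributed to AI: {effect:.0%}")
```

In this made-up case the AI-enabled workflow got 30% faster, but the control got 10% faster on its own, so only about 20 points of the reduction is credited to AI. That subtraction is the part budget conversations tend to hinge on.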

What is a practical first step leaders can take in the next 30 days?

Select one or two revenue or cost workflows. Define the baseline. Introduce human-in-the-loop checkpoints. Instrument measurement. Then standardize the pattern so other teams can reuse it instead of starting from scratch.