Women are constantly looking for wardrobe inspiration, and much of the time they find it by looking at what other women wear.

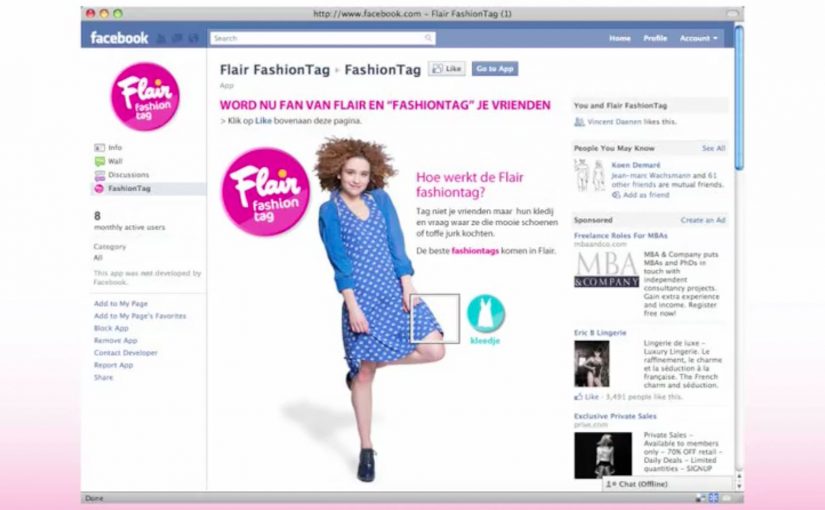

This insight led the agency Duval Guillaume to create Flair Fashiontag, a Facebook app for the Belgian women’s magazine Flair. Instead of tagging people, users tag other people’s clothes or accessories and ask them where they got them.

All fashiontags are displayed in a Facebook gallery, and the best ones are published in the weekly edition of Flair. This creates constant interaction between the Facebook application and the magazine itself.

Turning social curiosity into a repeatable format

The mechanism is a simple swap. Replace social tagging of people with social tagging of products. A photo becomes a shoppable question. The owner of the outfit becomes the source. The magazine becomes the curator that elevates the best finds from feed to print.

In fashion and lifestyle publishing, converting casual “where did you get that” moments into a structured loop is a practical way to keep community activity and editorial output feeding each other.

Why it lands

This works because it formalizes a behavior that already exists. People already look at outfits, notice details, and ask friends for sources. Fashiontag simply gives that behavior a native interface and a public gallery, then adds a prestige layer by featuring the best tags in the weekly magazine.

Extractable takeaway: If your audience already asks each other for product sources, build a lightweight format that captures those questions in the moment and rewards the best contributions with visible amplification.

What to steal

- Swap the object of attention: tag the item, not the person, when product discovery is the real intent.

- Close the loop with curation: a gallery is useful. Editorial selection makes it aspirational.

- Make participation low-friction: one tag, one question, one shareable output.

- Bridge channels on purpose: use print, site, and social as a single system, not separate campaigns.

- Protect the social contract: ensure the person in the photo is comfortable with tagging and featuring, especially when content moves into a magazine.

A few fast answers before you act

What is Flair Fashiontag?

It is a Facebook app for Flair magazine that lets users tag clothes or accessories in photos and ask where those items were purchased.

What makes it different from normal photo tagging?

Normal tagging identifies people. Fashiontag identifies items. It turns fashion curiosity into a structured question-and-answer interaction.

How does the magazine benefit from the Facebook app?

The app creates a steady flow of wardrobe inspiration and real questions from readers. The magazine then curates and publishes the best tags, which reinforces participation.

Why is this a strong community mechanic?

Because it rewards helpfulness. People contribute sources and recommendations, and the gallery plus print selection turns that help into recognition.

What is the biggest risk in this format?

Consent and comfort. Tagging items in someone’s photo can feel intrusive if the person did not opt in, especially if content can be featured publicly in print.